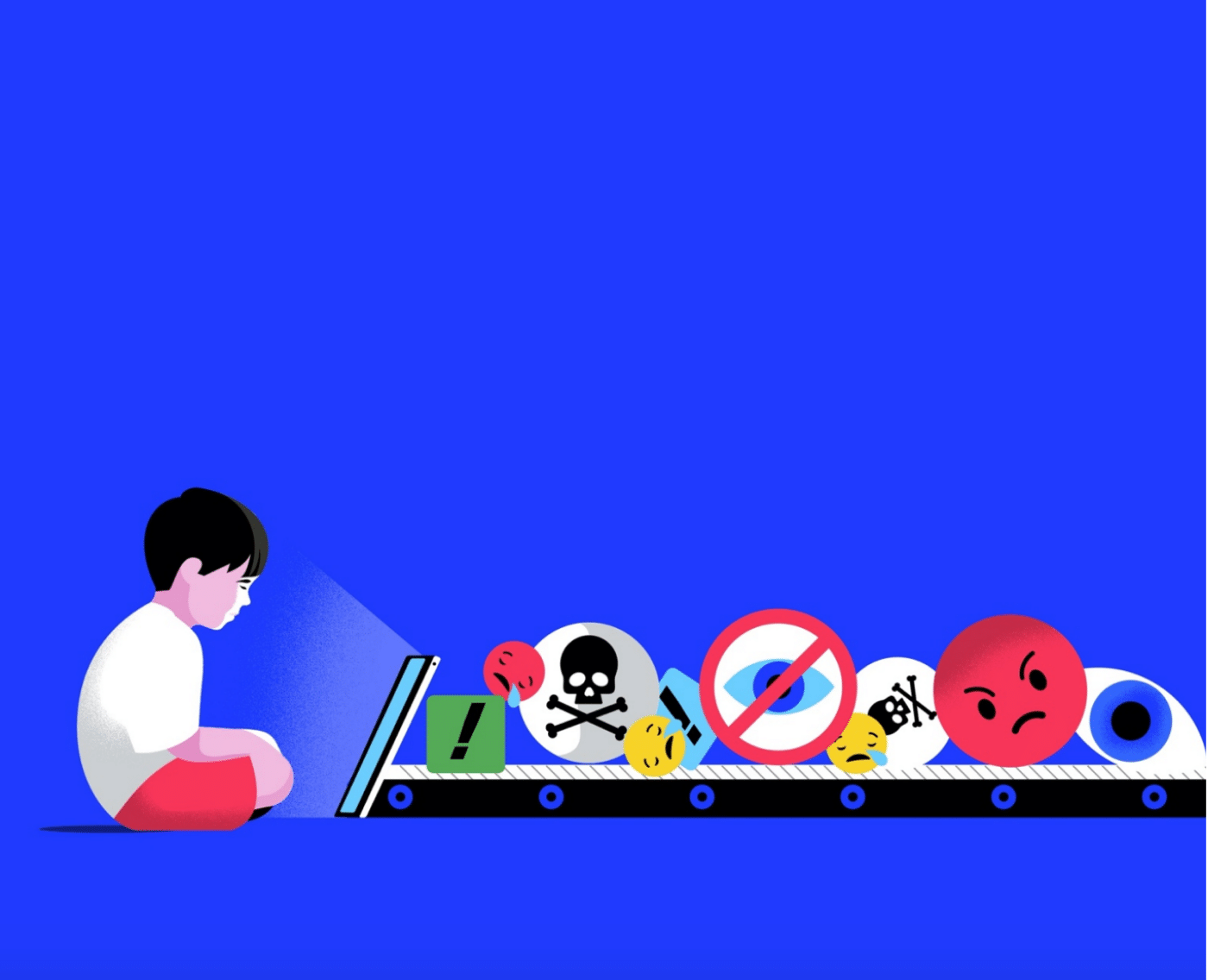

Child accounts shown harmful content within hours, new study finds

5Rights Foundation is calling for mandatory rules on the design of digital services after new research showed that online accounts registered to children are being targeted with sexual and suicide-related content.

The research, undertaken in partnership with Revealing Reality, establishes the pathways between the design of digital services and the risks children face online. It shows that services such as Facebook, Instagram and TikTok are allowing children, some as young as 13, to be directly targeted with a stream of harmful content within 24 hours of creating an account. Despite knowing the children’s ages, the companies are enabling unsolicited contact from adult strangers and recommending damaging content, including material related to eating disorders, extreme diets, self-harm and suicide, as well as sexualised imagery and distorted body images.

These services are not deliberately designed to put children at risk, but this new research shows that the risks they pose are not accidental either. These are not “bugs” but features. Revealing Reality interviewed engineers and designers who explained that they design to maximise engagement, activity and followers, the three drivers of revenue, rather than to keep children safe.

To test what children experience online without putting any real child at risk, the researchers created avatar accounts. Each new account was based on a real child, and it liked and searched for content in a way that reflected that child’s behaviour.

The research found:

- Within hours the avatar accounts were targeted with direct messages from adult users, asking to connect and offering pornography.

- Companies are targeting children with age-specific advertising while also serving those same children suicide, self-harm, eating disorder and sexual content.

- A child who clicks on a dieting tip is, by the end of the week, recommended images of bodies so unachievable that they distort any sense of what a body should look like.

- A child who gives their true age, however young, is offered content and experiences that in almost any other context would be illegal.

- Many children in this research blamed social media for negative and challenging experiences around body image and relationships that they had faced growing up.

This Pathways research connects the dots between how digital products are designed, and the impact they have on the lives of children.

5Rights Foundation Chair Baroness Kidron said: “The results of this research are alarming and upsetting. But just as the risks are designed into the system, they can be designed out. It is time for mandatory design standards for all services that impact or interact with children, to ensure their safety and wellbeing in the digital world.”

“In all other settings we offer children commonly agreed protections. A publican cannot serve a child a pint, a retailer may not sell them a knife, a cinema may not allow them to view an R18 film, a parent cannot deny them an education, and a drug company cannot give them an adult dose of medicine. These protections apply not only when harm is proven, but also in anticipation of the risks associated with their age and evolving capacity. These protections are hardwired into our legal system, our treaty obligations, and our culture. Everywhere but the digital world.

“What the Pathways research highlights is a profound carelessness and disregard for children, embedded in the features, products and services of the digital world.”

Children’s Commissioner for England Dame Rachel de Souza added: “This research highlights the enormous range of risks that children currently encounter online. We don’t allow children to access services and content that are inappropriate for them (such as pornography) in the offline world. They shouldn’t be able to access them in the online world either. I look forward to working with the Government, parents, online platforms and organisations such as 5Rights to bring about an online world which is fit for children.”

Ian Russell, Founder of the Molly Rose Foundation, added: “This valuable report details how safety is routinely overlooked in the design of tech platforms that prioritise profit, with young users’ safety scarcely considered. Shockingly, Pathways demonstrates how algorithmic amplification actively connects children to harmful digital content, sometimes, as I sadly know only too well, with tragic consequences.

“The report also reveals how young people feel about their online lives and how their offline lives are correspondingly altered. In our digital wilderness, young people need curated pathways to explore, allowing them to roam while remaining safe. Routes to trusted areas of support, especially in connection with mental health, should be better signposted so help can be provided whenever it is needed.

“All of us (governments, corporations and individuals) need to move fast to mend all things digital. We must find ways to weed out online harms and cultivate the good, if our digital world is to flourish as it should. Above all, we must prioritise safety, especially for children when online. We must work to stop digital wolves from seeking out the vulnerable and destroying young lives.”