Meta’s rollback on safety measures puts children at risk

Changes announced by Meta will actively reduce existing protections for children. This is an irresponsible move. As new laws and regulations come into force, regulators and policymakers worldwide must challenge this failure to implement systemic change.

5Rights Foundation escalates legal action against Meta over AI-generated child sexual abuse material on Instagram

Meta continues to ignore the prevalence of Child Sexual Abuse Material (CSAM) hosted and promoted on Instagram. So, we have urged Ofcom and the ICO to take action.

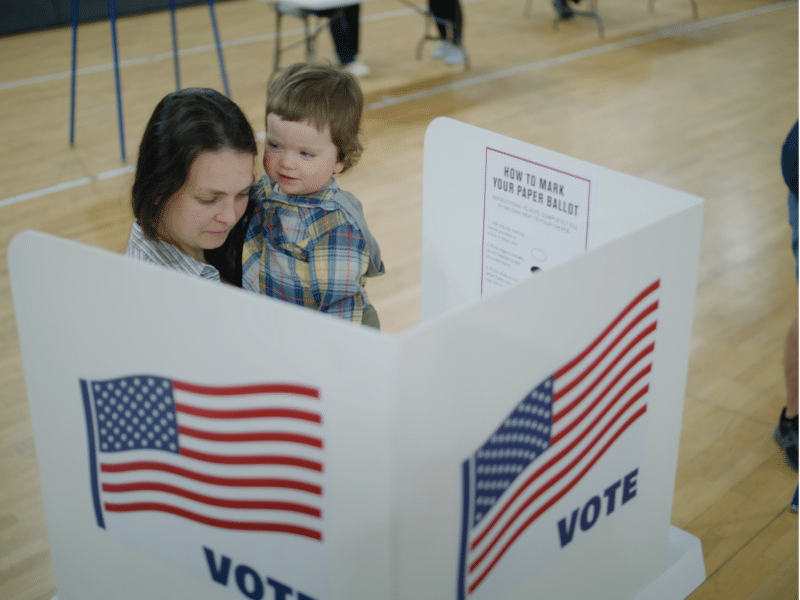

US elections: bipartisan support for youth online privacy and safety must continue

As the US prepares to enter a new legislative term, 5Rights calls for continued bipartisan support to advance children’s and teens’ privacy and safety online.

Instagram is not doing enough to keep children safe

In response to Instagram’s latest safety features, 5Rights believes more must be done to ensure children are fully protected on the platform.

TikTok knows it is harming children

Internal TikTok documents reveal that the company is promoting addictive design and targeting children while fully aware of the harms its product causes.

Australia: Children’s online safety measures must address systemic harms

Bold new proposals from the Australian government to ban under 16s from social media speak to the abject failure of tech companies to provide age-appropriate services.

Meta announces new changes for under 16s based on 5Rights principles

In line with the requirements of the Age Appropriate Design Code, Meta’s new privacy settings for teen accounts on Instagram are a promising sign, but more work is needed.

Supporting families globally: our work with The Parents’ Network

5Rights is partnering with Archewell’s Parents’ Network, working with families of children severely affected by online harms to call for online spaces designed with the needs and rights of children in mind.

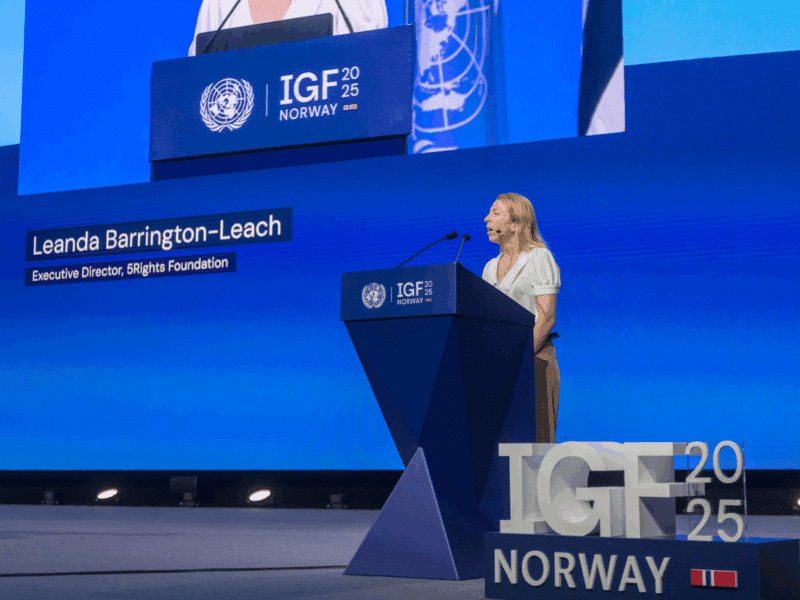

DSA turns 1: more potential for advancing children’s rights

Marking one year since the Digital Services Act (DSA) began applying to very large online platforms (VLOPs), we look at the progress made by the European Commission and outline the need for strong guidelines and enforcement to protect children’s rights online.

5Rights challenges Meta’s inaction on AI-generated CSAM

Despite clear evidence from UK police of Instagram accounts with over 150,000 followers sharing real and AI-generated Child Sexual Abuse Material (CSAM), Meta has failed to take decisive action. We have issued a legal letter demanding urgent intervention.

5Rights calls out Meta for ignoring AI-generated child sexual abuse material on Instagram

Child safety charity 5Rights has sent a legal letter to Meta detailing how the company has ignored reports of illegal child sexual abuse material (CSAM) on its platform, Instagram.

U.S. Surgeon General calls for action on young people’s mental health crisis

The U.S. Surgeon General is calling for warning labels on social media to alert users that these services are “associated with significant mental health harms for adolescents”.