Overview

The UK, where 5Rights was founded, has pioneered digital regulation for children. It introduced the world’s first enforceable Age Appropriate Design Code in 2020, followed by the Online Safety Act in 2023, making it a key testing ground for policy innovation and implementation.

“A perfect digital world should be focused on online safety of the content. Every child should be informed about the type of content before they access it”

William, 15

Children’s experiences

Almost all 3-17-year-olds in the UK go online, mostly to watch videos, play video games, send messages to their friends and stay connected via social media. Nearly half of 11-year-olds who go online have a social media profile, despite a minimum age requirement of 13 for most social media sites. Children are exposed to many advertisements while watching videos and are encouraged to spend money while playing online games. Grooming cases and self-generated child sexual imagery are also on the rise, especially among younger children. 5Rights advocates for digital spaces likely to be accessed by children to provide them with content and experiences appropriate to their age and evolving capacities.

Our work in the UK

5Rights works closely with policy makers and regulators and leads the work of the Children’s Coalition for Online Safety. We also partner with Bereaved Families for Online Safety to keep children’s online safety at the forefront of the political agenda. In partnership with the London School of Economics, 5Rights launched the Digital Futures for Children centre, dedicated to researching a rights-respecting digital world for children.

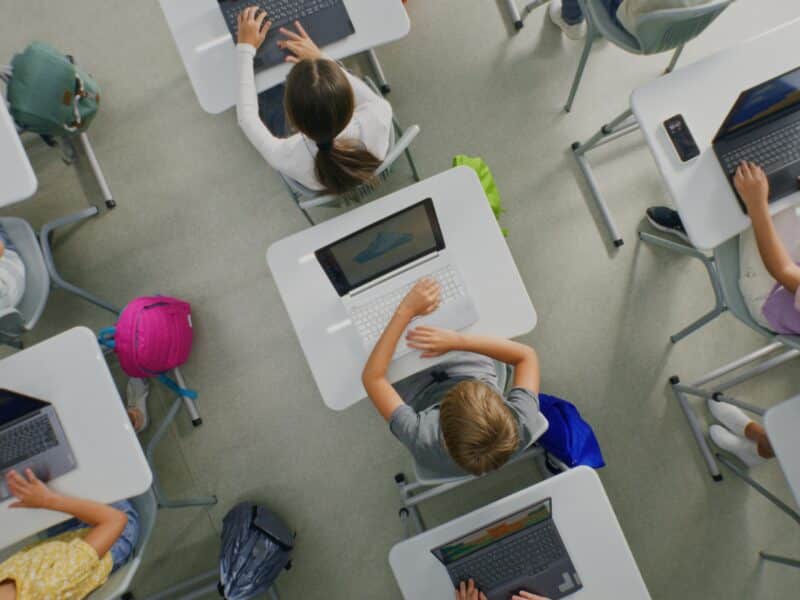

As part of our joint work with the Digital Futures for Children Centre, we are launching the Better EdTech Futures for Children project, which brings together young people across the UK to explore how technology and AI are shaping the classroom and to advocate for a more rights-respecting digital learning environment.

Latest

UK Government takes aim at manipulative digital design practices

The UK Government has announced new measures to strengthen online protections for children and young people, with a clear focus on tackling addictive and harmful digital design practices.

5Rights Youth Ambassadors give evidence to UK Parliamentarians on AI

5Rights Youth Ambassadors Eashaa and Niranjana represented 5Rights at the UK Parliament this week, giving evidence to an inquiry by the All-Party Parliamentary Group (APPG) for Online Safety examining the impact of artificial intelligence on children.

Social media–style design is already in the classroom, new research finds

As Parliament debates banning children from social media, new research reveals that many of the same harmful design features are already embedded in the technology children use every day at school, raising concerns for children’s privacy, wellbeing and exposure to commercial exploitation in the classroom.

Next steps for online safety in the UK: 5Rights sets the criteria for legislative action

In the coming weeks, Parliament will debate proposals on social media bans for under‑16s – a critical moment to strengthen the UK’s online safety framework. As children, parents, and civil society call for more ambitious protections, 5Rights Foundation is setting out clear criteria to ensure regulation delivers real‑world change.