Reducing the risk of misinformation

New case study illustrates role of design features in causing harm, and ways to prevent it.

The digital world has accelerated the spread of false information and increased its reach. In a new Risky By Design case study, 5Rights highlights eight of the features that contribute to the spread of misinformation.

Misinformation affects large numbers of children: 55% of 12–15-year-olds report having seen a false news story. This can have dangerous and immediate consequences, with significant long-term effects that influence their relationship with the world. For example, 60% of children report they trust news less as a result of ‘fake news’. A lack of trust in the internet, news and political processes is a deterrent to civic engagement among young people. Misinformation can also impact the health of young people. One in five 16–24-year-olds think there is no hard evidence coronavirus actually exists.

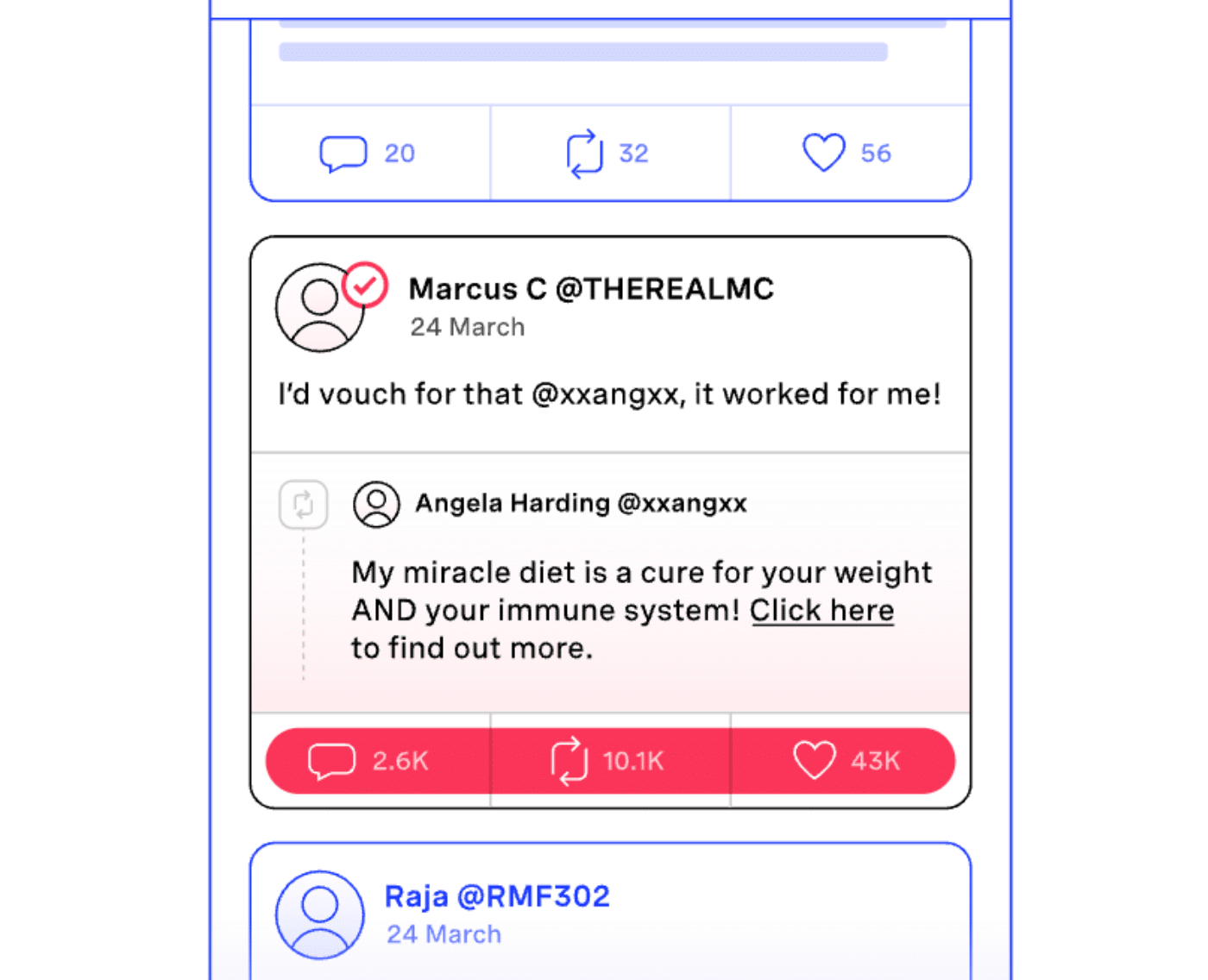

This new case study looks beyond existing narratives about ‘bad actors’ to consider the design features that increase the spread and reach of misinformation across services that are optimised to capture attention and extend engagement. It flags the contribution made by design features like popularity metrics and recommendation systems – so-called dark patterns – to the increasing spread of misinformation. The research also considers the role of autoplay features, trending lists, fake accounts, disappearing content and seamless sharing, and offers recommendations to platform developers to help minimise risk and build the digital world that young people deserve.

Risky By Design examines common design features that create risk in a series of case studies. The case studies are not based on any one service, but each highlights how these design features pose risks to young people.

Every digital service or environment is the product of a series of design decisions that shape the experiences of young people. Low default privacy settings make a child’s profile public and their identity and interests visible to strangers. Dark patterns nudge them to give up more data. False choices mean they spend money on in-game purchases in their favourite games. These design features are not neutral. They are driven primarily by commercial interests and can, individually or in combination, create risks that can lead to harm.

You can view the new case study here, and read the accompanying briefing here.