Digital Services Act mandates higher levels of privacy, safety and security for children

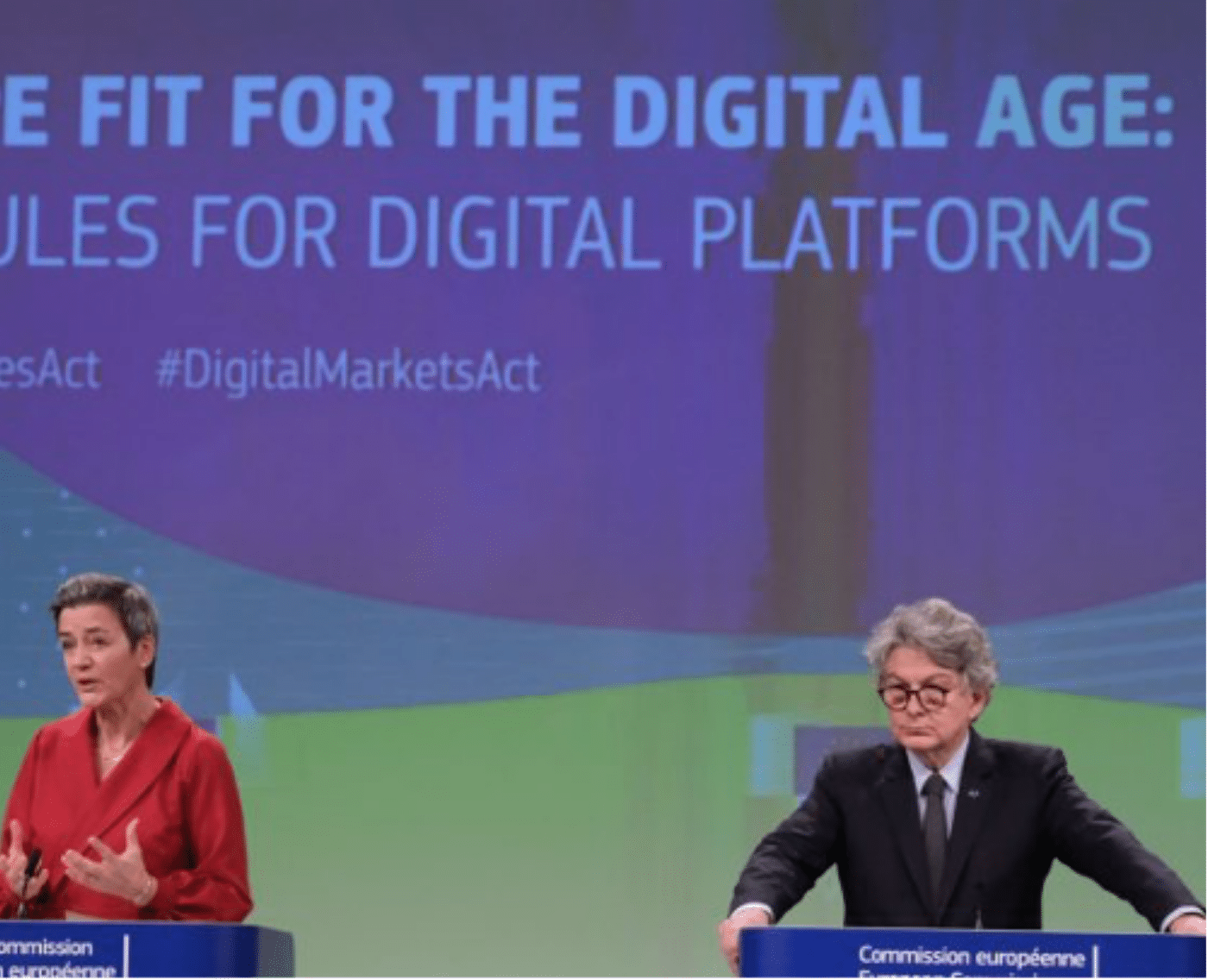

Just in time for World Children’s Day on 20 November, the EU’s landmark Digital Services Act (DSA) will come into effect next week, setting a new global standard for risk management, content moderation and transparency by tech companies. Directly applicable across all EU member states as well as Norway, Iceland and Liechtenstein, the DSA imposes strict obligations on companies offering their services to European consumers. When Elon Musk acquired Twitter last week and announced “The bird is freed”, European Commissioner Thierry Breton responded: “In Europe, the bird will fly by our rules.”

Provisions for children’s rights

The DSA is a landmark law for all consumers, not least for children. It requires all service providers to respect human rights and the rights of the child. It obliges all online platforms (defined as anything with a user-to-user function) to “put in place appropriate and proportionate measures to ensure a high level of privacy, safety, and security of minors, on their service” – a provision to be underpinned by the development of standards. Platforms are also prohibited from showing children ads based on profiling, and from using “dark patterns” to influence user choices.

Very large online platforms (those with more than 45 million average monthly active users in the EU – so the likes of YouTube, Meta and TikTok) are in for even stricter regulation as of July 2023. They will have to undertake comprehensive risk assessments annually to measure any negative impact of their service on children’s rights or on users’ physical and mental well-being. They must specifically examine risks related to the design of online interfaces, algorithms, and automated decision-making that may lead to addictive behaviour.

To mitigate any identified risks, very large online platforms must adapt their design features, algorithmic design, terms and conditions, content moderation systems, recommendation systems and data practices, with the best interests of the child in mind.

All online platforms will be subject to transparency reporting setting out how they comply with due diligence obligations. Big tech will also undergo annual independent external audits against a detailed list of criteria. The European Commission will enforce the DSA centrally for very large online platforms, and breaches can entail fines of up to 6% of global annual turnover.

Next step: Enforcement

The Commission, together with the national Digital Services Coordinators – who will have the task of enforcing the DSA within their jurisdictions, including holding to account all services that do not meet the threshold of 45 million users – must now set out the detailed ground rules for services to comply, and specify how they will judge performance.

The most straightforward way to do this is to use a product safety framework, with technical standards, accompanied by conformity assessments, that companies can proactively implement and be judged against. The IEEE Standard 2089 for an Age Appropriate Digital Services Framework provides a strong basis for an EU standard to underpin the clauses of the DSA related to children’s rights.

Developed by the international industry body the Institute of Electrical and Electronics Engineers Standards Association (IEEE SA) in collaboration with 5Rights, the Standard introduces practical steps that companies can follow to design digital products and services that are age-appropriate.

The IEEE Standard equips designers with the tools they need to innovate towards the digital world that children and young people deserve. It should be the starting point for regulators at the EU and national levels to implement the DSA’s provisions for children. Additional tools, such as our guidelines on Age Appropriate Terms and Conditions, upcoming template for Child Rights Impact Assessments and 4-step model for AI oversight, complete the package.

The EU has made headlines passing the DSA, but whether or not it makes history will depend on its ability to leverage the word of the law to deliver practical changes in the design of digital systems and a better experience for children. Global tech companies have already demonstrated their ability to deliver on age-appropriate design in conformity with UK and Californian law as well as statutory requirements in Ireland. The EU now has the legal basis and the practical tools to swiftly roll out those benefits to the millions of children within its borders, and consolidate a global standard that will drive responsible innovation and a better internet for all.