EU AI Act enters into force: A crucial step for child protection

The EU AI Act, a groundbreaking piece of legislation aimed at regulating artificial intelligence within the European Union, has officially entered into force today. This is a major step towards ensuring that AI is developed and used responsibly, especially when it comes to protecting children.

The Act is a comprehensive regulatory framework that categorises AI systems based on their level of risk. By explicitly recognising children’s rights as outlined in General Comment No. 25 to the UN Convention on the Rights of the Child, this law sets a strong precedent for tech regulation. Whether it will drive real change for young people, however, will ultimately depend on how effectively it is implemented.

A Framework for Child Safety in the AI Era

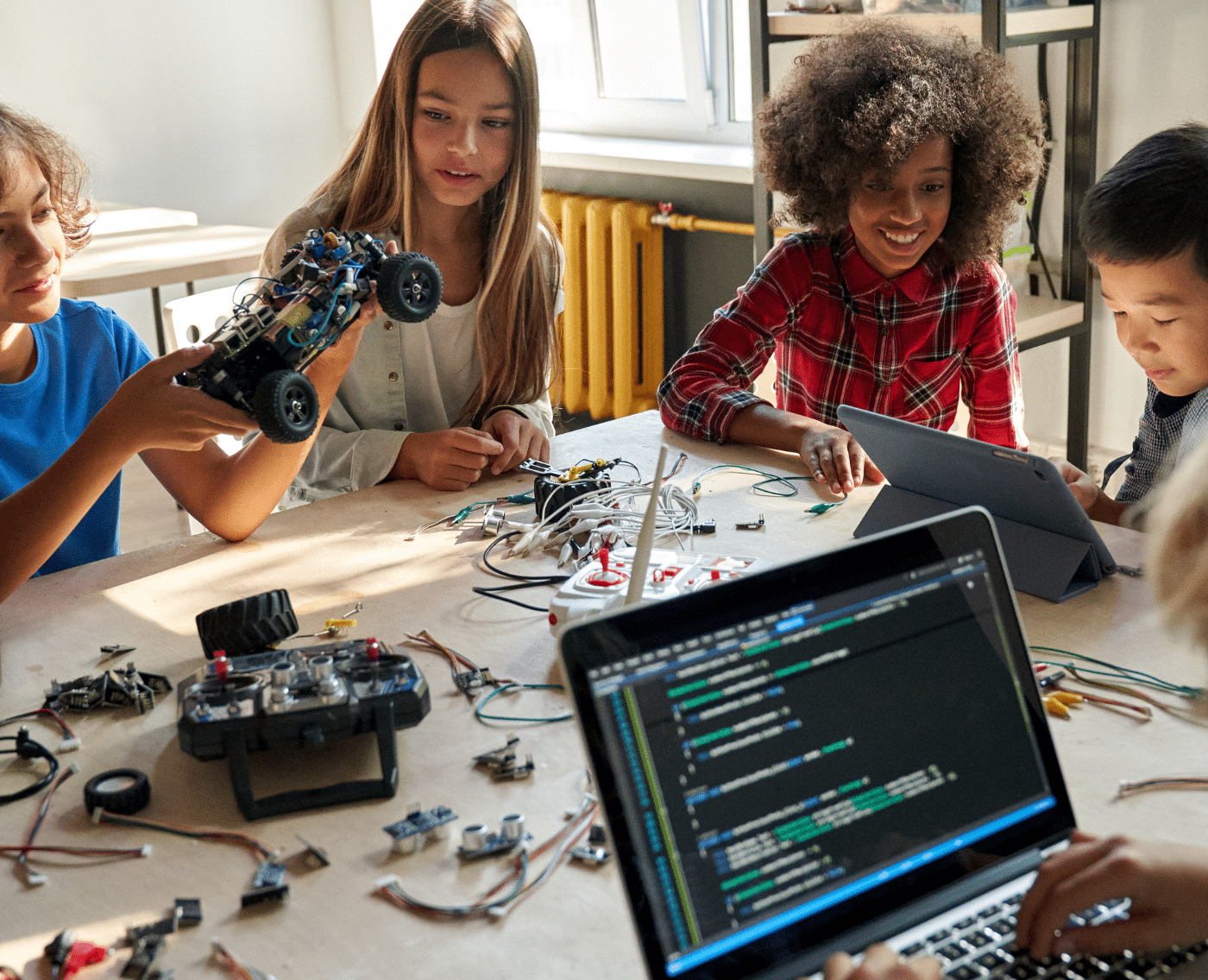

As early adopters of technology, children increasingly interact with AI systems embedded in various platforms and services they use for education, entertainment, social interactions and more. For instance, AI-driven recommender systems curate content to maximise user engagement, potentially exposing children to inappropriate or harmful material.

A central goal of the AI Act is to safeguard children from the specific vulnerabilities they face in the digital environment.

A key provision is the outright ban on AI systems that exploit vulnerabilities due to age. The requirement for rigorous risk assessments of “high-risk” AI systems, including those used in education, is another positive development. Finally, by mandating transparency through watermarking deepfakes and informing users when they are interacting with AI, the Act empowers individuals to make informed decisions.

From Policy to Practice

While the EU AI Act is a promising framework, its success hinges on robust implementation. The European Commission, together with national authorities, must develop clear and stringent guidelines to operationalise the Act’s provisions, especially those related to child protection.

Crucially, the ban on AI systems exploiting children’s vulnerabilities must be strictly enforced. The definition of ‘vulnerabilities due to age’ should encompass all individuals under 18, and the mechanisms for identifying and addressing such exploitation must be clearly outlined.

Similarly, risk assessments for high-risk AI systems must prioritise children’s safety. These assessments should meticulously evaluate potential harms to children and incorporate robust safeguards to mitigate risks.

Building a Safer Digital Future Together

The EU AI Act enters into force today, marking the beginning of a complex implementation process. The coming months and years will be crucial as policymakers, industry, and civil society work together to shape the Act’s practical application.

5Rights Foundation is committed to monitoring the implementation process closely and advocating to ensure that the principles enshrined in the UN Convention on the Rights of the Child and its General Comment No. 25 are fully integrated into the guidelines, codes of conduct, and technical standards that will bring this regulation to life.

The EU AI Act offers a unique opportunity to create a safer online environment for children. By effectively implementing the Act’s provisions, Europe can lead the way in developing AI that respects and upholds children’s rights.